
Top Test Automation Metrics: Essential KPIs to Boost QA Success and Efficiency

Introduction

In the fast-paced world of software development, where agile methodologies and continuous delivery are the norm, test automation has become an indispensable tool. It allows teams to rapidly validate software quality, catch bugs early, and ensure that new features don't break existing functionality. However, implementing test automation is just the first step. To truly harness its power and justify its investment, we need to measure its effectiveness and efficiency. This is where test automation metrics and Key Performance Indicators (KPIs) come into play.

A. Importance of test automation in modern software development

Test automation has revolutionized the software development lifecycle in several ways:

Speed and Efficiency: Automated tests can be run much faster and more frequently than manual tests, allowing for rapid feedback on code changes.

Consistency: Automated tests perform the same steps every time they run, reducing human error in execution and producing repeatable results.

Coverage: Automation allows teams to run a wider range of tests, including complex scenarios that might be impractical to test manually.

Continuous Integration and Delivery: Automated tests are crucial for CI/CD pipelines, enabling teams to detect and fix issues early in the development process.

Resource Optimization: By automating repetitive tests, QA professionals can focus on more complex, exploratory testing scenarios.

B. The need for measuring test automation effectiveness

While the benefits of test automation are clear, it's not enough to simply implement automation and hope for the best. We need to measure its effectiveness for several reasons:

ROI Justification: Test automation requires significant upfront investment. Metrics help justify this investment by demonstrating tangible benefits.

Continuous Improvement: By tracking metrics, teams can identify areas where their automation strategy is working well and where it needs improvement.

Resource Allocation: Metrics can help teams decide where to focus their automation efforts for maximum impact.

Quality Assurance: Measuring the effectiveness of test automation helps ensure that it's actually improving software quality and catching bugs.

Process Optimization: Metrics can reveal bottlenecks in the testing process, allowing teams to optimize their workflows.

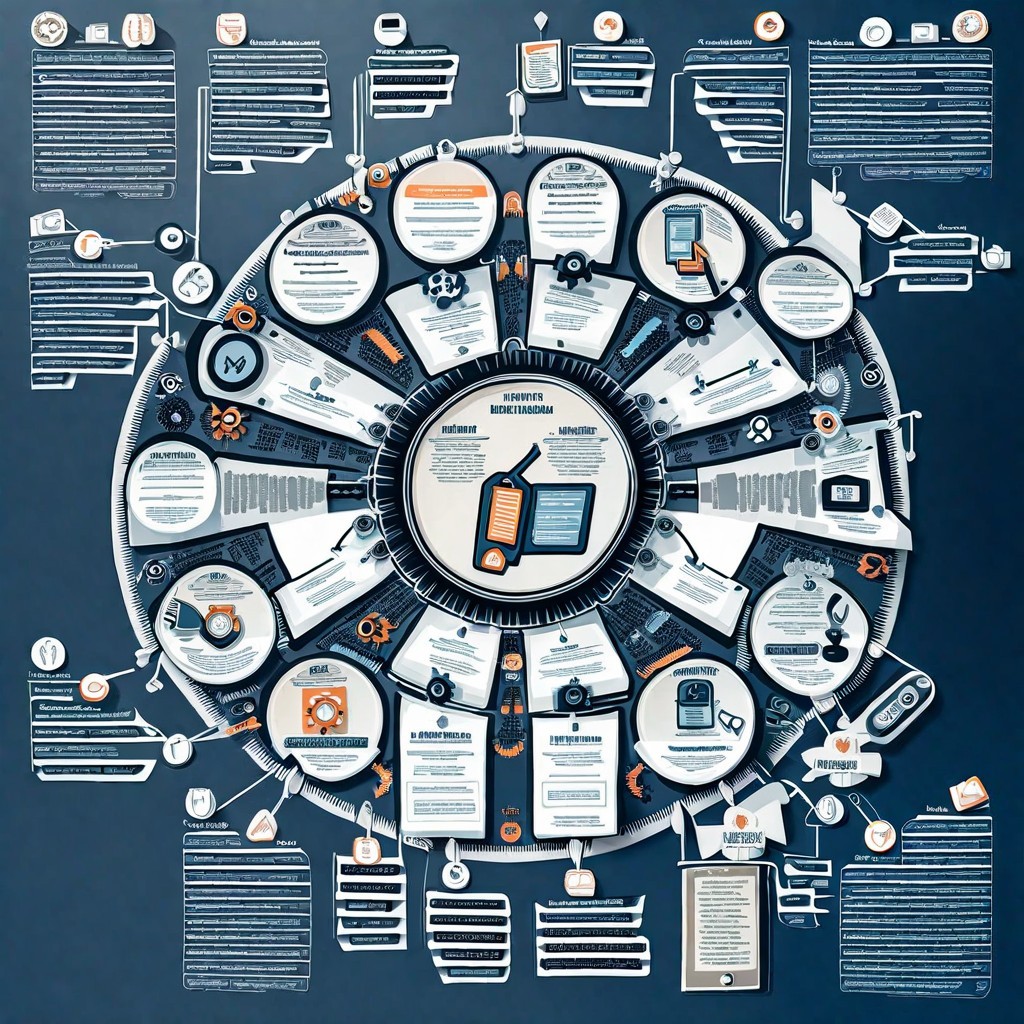

C. Overview of key metrics and KPIs

To effectively measure test automation success, teams should focus on a range of metrics and KPIs. These can be broadly categorized into:

Coverage Metrics: How much of your software is being tested?

Code coverage

Feature coverage

Requirements coverage

Execution Metrics: How efficiently are your tests running?

Test execution time

Pass/fail rates

Automated vs. manual test ratio

Defect Metrics: How effective are your tests at finding bugs?

Defect detection rate

Defect escape rate

Mean time to detect defects

Reliability Metrics: How stable and maintainable is your test suite?

Test flakiness rate

Test script maintenance effort

Test environment stability

ROI Metrics: What value is your test automation providing?

Cost savings from automation

Time saved through automation

Improved time-to-market

Performance Metrics: How does your software perform under test?

System response time

Resource utilization

Concurrent user load

In the following sections, we'll dive deep into each of these categories, exploring how to measure these metrics, what they mean for your testing process, and how to use them to drive continuous improvement in your software development lifecycle.

Test Coverage Metrics

Test coverage metrics are essential indicators of how thoroughly your software is being tested. They help identify gaps in your testing strategy and ensure that critical parts of your application are not overlooked. Let's explore the three primary types of test coverage metrics:

A. Code Coverage

Code coverage measures the percentage of your application's source code that is executed during testing. It's a quantitative measure that helps identify which parts of your codebase are exercised by your test suite and which parts remain untested.

Key Aspects of Code Coverage:

Types of Code Coverage:

Statement Coverage: Percentage of code statements executed

Branch Coverage: Percentage of branches (e.g., both the true and false outcomes of each if/else) taken during testing

Function Coverage: Percentage of functions called during testing

Condition Coverage: Percentage of boolean sub-expressions that have evaluated to both true and false during testing
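The gap between statement and branch coverage is easiest to see on an `if` that has no `else`. The toy sketch below uses Python's `sys.settrace` to record which lines run and which line-to-line transitions ("arcs") occur; it is an illustration only, since production tools such as JaCoCo, Istanbul, or coverage.py instrument code far more robustly. `apply_discount` is a hypothetical function invented for this example. One test with a member customer executes every statement but exercises only one of the two outcomes of the `if`.

```python
import sys


# Hypothetical function under test: an `if` with no `else`.
def apply_discount(price, is_member):
    if is_member:
        price = price * 0.9
    return price


_CODE = apply_discount.__code__
_FIRST = _CODE.co_firstlineno                      # line of the `def` statement
STATEMENTS = {_FIRST + 1, _FIRST + 2, _FIRST + 3}  # if, assignment, return
BRANCH_ARCS = {(_FIRST + 1, _FIRST + 2),           # condition true: enter the body
               (_FIRST + 1, _FIRST + 3)}           # condition false: skip to return


def measure_coverage(test_inputs):
    """Run apply_discount on each input; return (statement %, branch %)."""
    executed, arcs = set(), set()

    def global_tracer(frame, event, arg):
        # Only trace calls to the function under test.
        if event != "call" or frame.f_code is not _CODE:
            return None
        previous = None

        def local_tracer(frame, event, arg):
            nonlocal previous
            if event == "line":
                executed.add(frame.f_lineno)
                if previous is not None:
                    arcs.add((previous, frame.f_lineno))
                previous = frame.f_lineno
            return local_tracer

        return local_tracer

    sys.settrace(global_tracer)
    try:
        for price, is_member in test_inputs:
            apply_discount(price, is_member)
    finally:
        sys.settrace(None)

    # Intersect with the known sets so any extra events (e.g. on the def line) are ignored.
    statement_pct = 100 * len(executed & STATEMENTS) / len(STATEMENTS)
    branch_pct = 100 * len(arcs & BRANCH_ARCS) / len(BRANCH_ARCS)
    return statement_pct, branch_pct


print(measure_coverage([(100, True)]))                # (100.0, 50.0)
print(measure_coverage([(100, True), (100, False)]))  # (100.0, 100.0)
```

Adding a second test with a non-member closes the branch gap without changing statement coverage at all.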

Measuring Code Coverage:

Use code coverage tools integrated with your testing framework (e.g., JaCoCo for Java, Istanbul for JavaScript)

Set up your CI/CD pipeline to generate code coverage reports automatically
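When a CI job gates on coverage across many files, the overall figure should be computed from total covered and total statements rather than by averaging per-file percentages; otherwise a tiny, fully covered file can offset a large, poorly covered one. A minimal sketch, assuming the coverage tool's report has already been parsed into per-file statement counts. The file names, counts, and the 70% threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FileCoverage:
    path: str
    covered: int  # statements executed by the test suite
    total: int    # executable statements in the file


def overall_coverage(files):
    """Weighted coverage: total covered statements / total statements."""
    total = sum(f.total for f in files)
    if total == 0:
        return 0.0
    return 100 * sum(f.covered for f in files) / total


def naive_mean(files):
    """Unweighted mean of per-file percentages (shown only for comparison)."""
    return sum(100 * f.covered / f.total for f in files) / len(files)


def coverage_gate(files, threshold=70.0):
    """Return (passed, percent); a CI step could fail the build when passed is False."""
    percent = overall_coverage(files)
    return percent >= threshold, percent


report = [
    FileCoverage("billing/invoice.py", covered=450, total=600),  # 75%
    FileCoverage("utils/strings.py", covered=10, total=10),      # 100%
    FileCoverage("utils/constants.py", covered=5, total=5),      # 100%
    FileCoverage("auth/session.py", covered=40, total=200),      # 20%
]
passed, pct = coverage_gate(report)
print(f"weighted {pct:.1f}% -> {'pass' if passed else 'fail'}")  # weighted 62.0% -> fail
print(f"naive mean {naive_mean(report):.2f}%")                    # naive mean 73.75%
```

The naive mean clears the 70% bar while the weighted figure does not, because the large, poorly tested `auth/session.py` counts no more than a five-line constants file when percentages are averaged.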

Interpreting Code Coverage:

High coverage doesn't guarantee bug-free code, but low coverage often indicates inadequate testing

Aim for a balanced approach; 100% coverage is often impractical and may not be cost-effective

Industry standards vary, but 70-80% code coverage is often considered a good benchmark

Best Practices:

Focus on covering critical and complex parts of your codebase

Use code coverage data to identify untested areas and guide test creation

Combine code coverage with other metrics for a comprehensive view of test effectiveness

B. Feature Coverage

Feature coverage measures what proportion of your application's features or functionalities are exercised by your automation suite. It ensures that your tests are aligned with the actual user experience and business requirements.

Key Aspects of Feature Coverage:

Defining Features:

Break down your application into distinct features or user stories

Include both major functionalities and smaller, supporting features

Measuring Feature Coverage:

Create a feature inventory or checklist

Track which features are covered by automated tests

Calculate the percentage of features with automated test coverage
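The inventory-and-percentage steps above can be sketched as a small script. This assumes a hand-maintained feature inventory where each feature lists the automated tests that exercise it; the feature names, test names, and the `business_critical` flag are hypothetical. Uncovered features are returned with business-critical gaps first, so the output doubles as a prioritized to-do list.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    business_critical: bool
    automated_tests: list = field(default_factory=list)  # names of linked tests


def feature_coverage(features):
    """Return (coverage %, names of uncovered features, critical ones first)."""
    if not features:
        return 0.0, []
    covered = [f for f in features if f.automated_tests]
    uncovered = [f for f in features if not f.automated_tests]
    uncovered.sort(key=lambda f: not f.business_critical)  # False sorts first
    return 100 * len(covered) / len(features), [f.name for f in uncovered]


inventory = [
    Feature("Login", True, ["test_login_valid", "test_login_locked_account"]),
    Feature("Checkout", True, []),
    Feature("Wishlist", False, []),
    Feature("Search", False, ["test_search_by_keyword"]),
]
pct, gaps = feature_coverage(inventory)
print(f"Feature coverage: {pct:.0f}%")  # Feature coverage: 50%
print("Uncovered:", gaps)               # Uncovered: ['Checkout', 'Wishlist']
```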

Prioritizing Features:

Focus on business-critical features and core functionalities

Consider feature usage data to prioritize frequently used features

Best Practices:

Maintain a living document of features and their test coverage status

Regularly review and update feature coverage as new features are added

Aim for a balanced mix of unit, integration, and end-to-end tests for each feature

C. Requirements Coverage

Requirements coverage measures how well your automated tests verify that the software meets its specified requirements. It ensures that your testing efforts are aligned with the project's goals and stakeholder expectations.

Key Aspects of Requirements Coverage:

Types of Requirements:

Functional requirements: What the system should do

Non-functional requirements: How the system should perform (e.g., performance, security, usability)

Measuring Requirements Coverage:

Create a traceability matrix linking requirements to test cases

Calculate the percentage of requirements covered by automated tests

Use requirement management tools integrated with your test management system
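A traceability matrix can be as simple as a mapping from requirement IDs to the automated tests that verify them. The sketch below assumes such a mapping has been exported from your requirements or test management tool; the IDs, test names, and the functional/non-functional labels are hypothetical. Reporting coverage per requirement type helps surface the functional versus non-functional imbalance that is easy to miss in a single overall number.

```python
from collections import defaultdict

# Hypothetical traceability matrix: requirement ID -> (type, linked automated tests).
TRACEABILITY = {
    "REQ-001": ("functional", ["test_create_account"]),
    "REQ-002": ("functional", ["test_reset_password", "test_reset_link_expiry"]),
    "REQ-003": ("functional", []),
    "REQ-004": ("non-functional", ["test_search_p95_latency"]),
    "REQ-005": ("non-functional", []),
}


def requirements_coverage(matrix):
    """Return (overall %, {type: %}, list of requirement IDs with no automated test)."""
    totals, covered = defaultdict(int), defaultdict(int)
    untested = []
    for req_id, (req_type, tests) in matrix.items():
        totals[req_type] += 1
        if tests:
            covered[req_type] += 1
        else:
            untested.append(req_id)
    by_type = {t: 100 * covered[t] / totals[t] for t in totals}
    overall = 100 * sum(covered.values()) / sum(totals.values()) if totals else 0.0
    return overall, by_type, untested


overall, by_type, untested = requirements_coverage(TRACEABILITY)
print(overall, untested)  # 60.0 ['REQ-003', 'REQ-005']
```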

Challenges in Requirements Coverage:

Dealing with changing or evolving requirements

Ensuring coverage of implicit or assumed requirements

Balancing coverage of functional and non-functional requirements

Best Practices:

Involve QA teams early in the requirements gathering process

Regularly review and update requirements coverage as the project evolves

Use a combination of automated and manual tests to ensure comprehensive requirements coverage

By focusing on these three aspects of test coverage - code, feature, and requirements - you can ensure a comprehensive approach to test automation. Remember that while high coverage is generally desirable, the quality and relevance of your tests are equally important. Strive for a balance between coverage metrics and other qualitative factors to create a robust and effective test automation strategy.

Ship bug-free software, 200% faster, in 20% testing budget. No coding required

Test Execution Metrics

Test execution metrics provide insights into the efficiency and effectiveness of your testing process. They help you understand how quickly your tests run, how reliable they are, and how well you're balancing automated and manual testing efforts. Let's explore three key test execution metrics:

A. Test Execution Time

Test execution time measures how long it takes to run your automated test suite. This metric is crucial for maintaining an efficient CI/CD pipeline and ensuring quick feedback on code changes.

Key Aspects of Test Execution Time:

Importance:

Faster test execution enables more frequent testing and faster development cycles

Long-running tests can become a bottleneck in the development process

Measuring Test Execution Time:

Use built-in timing features in your test framework or CI/CD tool

Track execution time for individual tests, test suites, and the entire test run

Monitor trends over time to identify slowdowns

Strategies for Optimizing Test Execution Time:

Parallelize test execution where possible

Segment and prioritize the suite (e.g., run fast smoke tests on every commit and the full regression suite on a schedule)

Optimize test environment setup and teardown

Regularly refactor and optimize slow tests

Best Practices:

Set baseline execution times and alert on significant deviations

Balance thoroughness with execution speed

Consider time-boxing long-running tests or moving them to separate test cycles
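"Set baseline execution times and alert on significant deviations" can be sketched as a comparison of per-test durations against a stored baseline. This assumes durations in seconds are already available from your framework's or CI tool's timing output; the 20% tolerance, the 1-second floor (to ignore very fast tests whose relative timing is noisy), and the test names are hypothetical choices.

```python
def find_slowdowns(baseline, current, tolerance=0.20, min_seconds=1.0):
    """Flag tests whose duration grew by more than `tolerance` over the baseline.

    baseline, current: dict mapping test name -> duration in seconds.
    Returns {test name: percent slower than baseline}.
    """
    flagged = {}
    for name, seconds in current.items():
        base = baseline.get(name)
        if base is None or base < min_seconds:
            continue  # new test, or too fast for a relative comparison to be meaningful
        if seconds > base * (1 + tolerance):
            flagged[name] = round((seconds - base) / base * 100, 1)
    return flagged


baseline = {"test_checkout_flow": 40.0, "test_search": 12.0, "test_parse_date": 0.02}
current = {
    "test_checkout_flow": 55.0,
    "test_search": 12.5,
    "test_parse_date": 0.09,
    "test_new_report": 30.0,
}
print(find_slowdowns(baseline, current))  # {'test_checkout_flow': 37.5}
```

New tests are skipped rather than flagged; a real pipeline would typically add them to the baseline after a few stable runs.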

B. Test Case Pass/Fail Rate

The pass/fail rate measures the proportion of your automated tests that pass or fail in each test run. This metric helps gauge the stability of your application and the reliability of your test suite.

Key Aspects of Pass/Fail Rate:

Calculating Pass/Fail Rate:

Pass Rate = (Number of Passed Tests / Total Number of Tests Executed) * 100; the fail rate is the share of failed tests in the same total

Track pass/fail rates for individual test suites and the overall test run
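A minimal sketch of the pass-rate calculation, per suite and for the whole run. It assumes each result is a `(suite, outcome)` pair with outcome `passed`, `failed`, or `skipped`, and it excludes skipped tests from the denominator; that is an assumption, since some teams count skipped tests as not passed. Suite names and counts are hypothetical.

```python
from collections import Counter, defaultdict


def pass_rates(results):
    """Return (overall pass %, {suite: pass %}); skipped tests are excluded."""
    per_suite = defaultdict(Counter)
    for suite, outcome in results:
        per_suite[suite][outcome] += 1

    def rate(counts):
        executed = counts["passed"] + counts["failed"]
        return 100 * counts["passed"] / executed if executed else None

    overall = sum(per_suite.values(), Counter())  # merge all suite counters
    return rate(overall), {suite: rate(c) for suite, c in per_suite.items()}


run = (
    [("api", "passed")] * 48 + [("api", "failed")] * 2
    + [("ui", "passed")] * 18 + [("ui", "failed")] * 2 + [("ui", "skipped")] * 5
)
overall, by_suite = pass_rates(run)
print(round(overall, 1), by_suite)  # 94.3 {'api': 96.0, 'ui': 90.0}
```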

Interpreting Pass/Fail Rates:

High pass rates generally indicate stable code and reliable tests

Sudden drops in pass rates may signal introduced bugs or test environment issues

Consistently low pass rates might indicate unstable features or flaky tests

Addressing Failed Tests:

Implement a triage process to quickly assess and categorize test failures

Distinguish between application bugs, test bugs, and environmental issues

Track trends in failure types to identify systemic issues

Best Practices:

Set target pass rates and monitor trends over time

Implement automated notifications for test failures

Maintain a "zero tolerance" policy for test failures in critical areas

Regularly review and update tests to reduce false positives and negatives

C. Automated vs. Manual Test Ratio

This metric compares the number of automated tests to manual tests in your testing strategy. It helps you assess your progress in test automation and identify areas that might benefit from further automation.

Key Aspects of Automated vs. Manual Test Ratio:

Calculating the Ratio:

Automation Percentage = (Number of Automated Tests / Total Number of Tests, automated plus manual) * 100

Track ratios for different types of tests (e.g., unit, integration, UI)
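Breaking the automation percentage down by test level often tells a different story than the overall number. The sketch below assumes a test inventory where each case is tagged with its level and whether it is automated; the levels and counts are hypothetical.

```python
from collections import Counter


def automation_percentage(test_cases):
    """test_cases: iterable of (level, is_automated). Return (overall %, {level: %})."""
    total, automated = Counter(), Counter()
    for level, is_automated in test_cases:
        total[level] += 1
        if is_automated:
            automated[level] += 1
    overall = 100 * sum(automated.values()) / sum(total.values()) if total else 0.0
    by_level = {level: 100 * automated[level] / total[level] for level in total}
    return overall, by_level


suite_inventory = (
    [("unit", True)] * 400
    + [("integration", True)] * 60 + [("integration", False)] * 20
    + [("ui", True)] * 30 + [("ui", False)] * 90
)
overall, by_level = automation_percentage(suite_inventory)
print(round(overall, 1), by_level)  # 81.7 {'unit': 100.0, 'integration': 75.0, 'ui': 25.0}
```

Here an overall figure above 80% hides the fact that only a quarter of UI tests are automated, which is where the remaining manual effort is concentrated.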

Benefits of a Higher Automation Ratio:

Increased test coverage and execution frequency

Reduced manual testing effort and human error

Faster feedback on code changes

Considerations for Automation:

Not all tests are suitable for automation (e.g., exploratory testing, UX evaluation)

Automation requires upfront investment in time and resources

Automated tests need maintenance as the application evolves

Strategies for Improving Automation Ratio:

Identify repetitive, high-value test cases for automation

Implement automation at multiple levels (unit, integration, UI)

Use behavior-driven development (BDD) to create automated tests from specifications

Invest in training and tools to enable more team members to contribute to test automation

Best Practices:

Set targets for automation ratios based on project needs and constraints

Regularly review manual tests for automation opportunities

Maintain a balance between automated and manual testing to leverage the strengths of both approaches

Track the ROI of test automation to justify continued investment

By focusing on these test execution metrics - execution time, pass/fail rate, and automation ratio - you can gain valuable insights into the efficiency and effectiveness of your testing process. These metrics will help you identify bottlenecks, improve test reliability, and optimize your balance between automated and manual testing efforts. Remember that while these metrics are powerful tools, they should be interpreted in the context of your specific project needs and used alongside other qualitative assessments to drive continuous improvement in your testing strategy.

Defect Metrics

Defect metrics are crucial indicators of the effectiveness of your testing process and the overall quality of your software. They help you understand how well your testing efforts are identifying issues, how many problems are slipping through to production, and how quickly you're able to find defects. Let's explore three key defect metrics:

A. Defect Detection Rate (DDR)

The Defect Detection Rate measures the percentage of all known defects, including those discovered after release, that were identified during the testing phase.

Key Aspects of Defect Detection Rate:

Calculation: DDR = (Defects found during testing / Total defects) * 100
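The DDR formula translates directly into code. This sketch assumes each defect record carries the phase in which it was found, with `production` marking defects found after release; the phase labels and counts are hypothetical. Because post-release defects keep arriving, DDR for a given release is only comparable across releases if it is computed after the same observation window (for example, a fixed number of days after release, chosen by your team).

```python
def defect_detection_rate(found_in_phases):
    """Return DDR % given the phase label in which each defect was found.

    'production' means found after release; every other label counts as
    found during testing.
    """
    phases = list(found_in_phases)
    if not phases:
        return None
    found_in_testing = sum(1 for phase in phases if phase != "production")
    return 100 * found_in_testing / len(phases)


defects = ["unit"] * 30 + ["integration"] * 25 + ["system"] * 30 + ["production"] * 15
print(defect_detection_rate(defects))  # 85.0
```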

Importance:

High DDR indicates effective testing processes

Helps in assessing the quality of testing efforts

Provides insights into the maturity of the development process

Interpreting DDR:

A higher DDR is generally better, indicating that more defects are caught before release

Industry benchmarks vary, but a DDR of 85% or higher is often considered good

Trends in DDR over time can be more informative than absolute values

Strategies to Improve DDR:

Implement diverse testing techniques (e.g., unit, integration, system testing)

Use both static (code reviews, static analysis) and dynamic testing methods

Continuously refine and update test cases based on new defect patterns

Encourage a "shift-left" testing approach to find defects earlier in the development cycle

Best Practices:

Track DDR across different testing phases and types of defects

Use DDR in conjunction with other metrics for a comprehensive quality assessment

Regularly review and analyze defects to identify areas for improvement in testing

B. Defect Escape Rate (DER)

The Defect Escape Rate measures the proportion of defects that are not caught during the testing phase and "escape" into production.

Key Aspects of Defect Escape Rate:

Calculation: DER = (Defects found after release / Total defects) * 100

Note: DER is the complement of DDR (DER = 100% - DDR), because every defect in the total is found either before or after release.
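A minimal sketch that computes DER and, in the same pass, categorizes escaped defects by severity. It assumes each defect is a `(found_in, severity)` pair where `found_in` is `testing` or `production`; the severity labels and counts are hypothetical. The DDR line simply restates the complement relationship for the same defect population.

```python
from collections import Counter


def escape_report(defects):
    """Return (DER %, Counter of escaped defects by severity)."""
    defects = list(defects)
    if not defects:
        return None, Counter()
    escaped = [severity for found_in, severity in defects if found_in == "production"]
    der = 100 * len(escaped) / len(defects)
    return der, Counter(escaped)


defects = (
    [("testing", "minor")] * 60 + [("testing", "major")] * 30
    + [("production", "minor")] * 6 + [("production", "critical")] * 4
)
der, by_severity = escape_report(defects)
ddr = 100 - der  # DER and DDR are complements over the same defect population
print(der, ddr, by_severity.most_common())  # 10.0 90.0 [('minor', 6), ('critical', 4)]
```

A DER of 10% reads very differently depending on whether the escapes are cosmetic or critical, which is why the severity breakdown is worth reporting next to the headline number.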

Importance:

Indicates the effectiveness of the testing process in preventing defects from reaching end-users

Helps in assessing the potential risk and cost associated with post-release defects

Interpreting DER:

A lower DER is better, indicating fewer defects reaching production

High DER might suggest inadequate test coverage or ineffective testing strategies

Strategies to Reduce DER:

Enhance test coverage, particularly for critical and high-risk areas

Implement robust regression testing to prevent reintroduction of fixed defects

Use production monitoring and quick feedback loops to catch escaped defects early

Conduct thorough user acceptance testing (UAT) before release

Best Practices:

Categorize escaped defects by severity and impact

Analyze root causes of escaped defects to improve testing processes

Set target DER levels and track progress over time

Use DER as a key input for release decisions

C. Mean Time to Detect Defects (MTTD)

MTTD measures the average time between when a defect is introduced into the system and when it is detected.

Key Aspects of Mean Time to Detect Defects:

Calculation: MTTD = Sum of (Detection Time - Introduction Time) for all defects / Total number of defects
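The MTTD formula can be computed with the standard library's `datetime`. This sketch assumes each defect has an introduction timestamp and a detection timestamp; in practice the introduction time is usually an estimate (for example, the commit later identified as the cause), so treat the result as approximate. The timestamps below are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_time_to_detect(defects):
    """defects: iterable of (introduced_at, detected_at) datetimes. Return a timedelta."""
    gaps = [(detected - introduced).total_seconds() for introduced, detected in defects]
    if not gaps:
        return None
    return timedelta(seconds=mean(gaps))


defects = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 11, 0)),   # detected after 2 hours
    (datetime(2024, 3, 2, 14, 0), datetime(2024, 3, 4, 14, 0)),  # detected after 2 days
    (datetime(2024, 3, 5, 10, 0), datetime(2024, 3, 5, 12, 0)),  # detected after 2 hours
]
print(mean_time_to_detect(defects))  # 17:20:00
```

A single late detection dominates the mean here (17 h 20 min, against a median of 2 hours), so reporting the median alongside MTTD can make trends easier to read.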

Importance:

Indicates the efficiency of your defect detection process

Helps in assessing the impact of testing strategies on defect discovery

Lower MTTD generally leads to lower cost of defect resolution

Interpreting MTTD:

Shorter MTTD is generally better, indicating faster defect detection

MTTD should be analyzed in context with the complexity of defects and the system

Strategies to Reduce MTTD:

Implement continuous testing throughout the development lifecycle

Use automated testing to increase testing frequency and coverage

Employ techniques like Test-Driven Development (TDD) to catch defects at the source

Leverage AI and machine learning for predictive defect detection

Best Practices:

Track MTTD for different types of defects and phases of testing

Use MTTD trends to assess the impact of changes in testing processes

Combine MTTD with other metrics like defect severity for comprehensive analysis

Consider the trade-off between MTTD and testing resources/costs

By focusing on these defect metrics - Defect Detection Rate, Defect Escape Rate, and Mean Time to Detect Defects - you can gain valuable insights into the effectiveness of your testing processes and the overall quality of your software. These metrics help you identify areas for improvement, justify investments in testing resources, and make informed decisions about release readiness.

Remember that while these metrics provide valuable quantitative data, they should be interpreted in the context of your specific project and organizational goals. Use them as part of a balanced scorecard approach, combining them with other metrics and qualitative assessments to drive continuous improvement in your software quality assurance efforts.

Reliability Metrics

Reliability metrics are crucial for assessing the consistency and dependability of your test automation suite. They help you understand how stable your tests are, how much effort is required to maintain them, and how reliable your test environment is. Let's explore three key reliability metrics:

A. Test Flakiness Rate

Test flakiness refers to the phenomenon where a test sometimes passes and sometimes fails without any changes to the code or test environment. The flakiness rate measures the proportion of tests that exhibit this inconsistent behavior.

Test Flakiness Rate = (Number of Flaky Tests / Total Number of Tests) * 100

High flakiness rates reduce trust in the test suite, can mask real issues, and waste developer time. Common causes include asynchronous operations, time dependencies, resource contention, and external dependencies.
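
To apply this formula you first need a rule for deciding which tests are flaky. Here is a minimal sketch, assuming you can export run history as (test name, commit, passed) records. It counts a test as flaky when it both passed and failed on the same commit. The record shape and the helper names `flaky_tests` and `flakiness_rate` are hypothetical; adapt them to what your CI system actually reports.

```python
from collections import defaultdict
from typing import Iterable, List, Set, Tuple

# Each record: (test_name, commit_sha, passed). This shape is an assumption.
RunRecord = Tuple[str, str, bool]


def flaky_tests(runs: Iterable[RunRecord]) -> Set[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)  # (test, commit) -> set of observed outcomes
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}


def flakiness_rate(runs: Iterable[RunRecord]) -> float:
    """Test Flakiness Rate = flaky tests / total distinct tests * 100."""
    records: List[RunRecord] = list(runs)
    all_tests = {test for test, _, _ in records}
    if not all_tests:
        return 0.0
    return len(flaky_tests(records)) / len(all_tests) * 100


history = [
    ("test_login", "a1b2", True),
    ("test_login", "a1b2", False),   # same commit, different outcome: flaky
    ("test_search", "a1b2", True),
    ("test_search", "c3d4", False),  # different commits: not flaky by this rule
    ("test_checkout", "a1b2", True),
]
print(f"Flakiness rate: {flakiness_rate(history):.1f}%")
```

Keying on the commit matters. A test that fails only after a code change is reporting a regression, not flakiness.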

To reduce flakiness:

Use explicit waits instead of fixed sleep times, and treat automatic retries as a temporary safety net, since retries hide flaky tests rather than fix them

Ensure proper test isolation and data cleanup

Mock external dependencies when possible
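
The difference between a fixed sleep and an explicit wait can be sketched in plain Python. Instead of sleeping for a worst-case duration, the test polls a condition until it holds or a timeout expires. `wait_until` is a hypothetical helper for illustration; UI frameworks such as Selenium ship their own explicit-wait APIs, which you would normally use instead.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.05) -> None:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Unlike a fixed sleep, this returns as soon as the condition holds, and it
    fails loudly with TimeoutError instead of letting the test race ahead.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Usage: wait for a (simulated) background job instead of a hard-coded sleep(3).
job_started = time.monotonic()
wait_until(lambda: time.monotonic() - job_started > 0.2, timeout=2.0)
```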

Best practices include setting a target flakiness rate (e.g., <1% of tests), monitoring test runs to detect flaky tests automatically, and prioritizing their investigation and repair.

B. Test Script Maintenance Effort

This metric measures the time and resources required to keep your test scripts up-to-date and functioning correctly as your application evolves.

High maintenance effort can slow down development and reduce the ROI of automation. Factors affecting maintenance effort include script complexity, frequency of application changes, and quality of initial test design.

To reduce maintenance effort:

Implement modular and reusable test components

Use data-driven testing and robust element locator strategies

Employ proper abstraction layers (e.g., Page Object Model)

Set benchmarks for acceptable maintenance effort (e.g., <20% of total testing time) and regularly review and update test scripts alongside application changes.
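
Checking this benchmark only requires a time log split by activity. Here is a minimal sketch, assuming hours are logged per activity; the category names and numbers are hypothetical.

```python
from typing import Dict

# Hours logged per testing activity for one sprint (hypothetical numbers).
time_log: Dict[str, float] = {
    "test_execution_and_triage": 30.0,
    "new_test_development": 25.0,
    "script_maintenance": 15.0,  # fixing broken locators, updating flows, etc.
}


def maintenance_share(log: Dict[str, float], key: str = "script_maintenance") -> float:
    """Percentage of total testing time spent maintaining existing scripts."""
    total = sum(log.values())
    return log.get(key, 0.0) / total * 100 if total else 0.0


share = maintenance_share(time_log)
print(f"Maintenance share: {share:.1f}%")  # 15 / 70 -> 21.4%
if share > 20.0:  # the example 20% benchmark from the text
    print("Above benchmark: review brittle or duplicated scripts")
```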

C. Test Environment Stability

This metric assesses the reliability and consistency of your test environments, which is crucial for obtaining accurate and repeatable test results.

Unstable environments can lead to false test results and wasted time. Common stability issues include inconsistent configurations, resource constraints, data inconsistencies, and network issues.

To improve environment stability:

Implement infrastructure-as-code and use containerization for consistent provisioning

Implement proper data management and reset procedures

Monitor resource usage and implement environment health checks
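
The health checks mentioned above can be sketched as a pre-run gate. It probes each dependency and refuses to start the suite if any probe fails, so an environment problem is reported as such rather than as a wave of test failures. The service names, hosts and ports below are placeholders, and `assert_environment_healthy` is a hypothetical helper.

```python
import socket
from typing import Callable, Dict


def tcp_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def run_health_checks(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run every check; an exception inside a check counts as a failure."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def assert_environment_healthy(checks: Dict[str, Callable[[], bool]]) -> None:
    """Raise before the suite starts if any dependency is unavailable."""
    results = run_health_checks(checks)
    failed = [name for name, ok in results.items() if not ok]
    if failed:
        raise RuntimeError(f"Environment unhealthy, skipping test run: {failed}")


# Placeholder endpoints: replace with your environment's real services, then
# call assert_environment_healthy(checks) before starting the suite.
checks = {
    "database": lambda: tcp_port_open("localhost", 5432),
    "api": lambda: tcp_port_open("localhost", 8080),
}
```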

Best practices include defining standard configurations for test environments, implementing automated setup and teardown processes, and using production-like data and configurations in test environments.

By focusing on these reliability metrics, you can significantly improve the dependability and efficiency of your test automation suite. These metrics help you identify areas where your testing process may be fragile or inefficient, allowing you to take targeted actions to enhance overall test reliability.

Remember that improving reliability is an ongoing process. Regularly monitor these metrics, set improvement goals, and adjust your strategies as needed. A reliable test automation suite not only saves time and resources but also builds confidence in your software quality assurance process, ultimately leading to faster, more dependable software releases.

ROI Metrics

Return on Investment (ROI) metrics are crucial for justifying the investment in test automation and demonstrating its value to stakeholders. These metrics help quantify the benefits of automation in terms of cost savings, time efficiency, and faster product delivery. Let's explore three key ROI metrics:

A. Cost Savings from Automation

This metric measures the financial benefits of implementing test automation compared to manual testing.

Calculation: Cost Savings = (Cost of Manual Testing - Cost of Automated Testing) / Cost of Manual Testing * 100
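
Plugging hypothetical per-cycle figures into this formula shows how it behaves. Note that it compares recurring costs only, so the initial investment listed below has to be accounted for separately. The function name `cost_savings_pct` is illustrative.

```python
def cost_savings_pct(manual_cost: float, automated_cost: float) -> float:
    """Cost Savings = (manual - automated) / manual * 100."""
    if manual_cost <= 0:
        raise ValueError("manual_cost must be positive")
    return (manual_cost - automated_cost) / manual_cost * 100


# Hypothetical recurring cost of one regression cycle.
manual = 12_000.0     # tester hours * hourly rate for a manual regression pass
automated = 3_000.0   # CI infrastructure, tooling, triage and upkeep per cycle
print(f"{cost_savings_pct(manual, automated):.0f}% saved per cycle")  # 75% saved per cycle
```

A negative result means automation currently costs more per cycle than manual testing. That can happen for suites with heavy maintenance or rarely executed tests.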

To accurately assess cost savings:

Consider all costs associated with both manual and automated testing:

Labor costs (testers, developers, QA engineers)

Tool and infrastructure costs

Training and upskilling costs

Factor in long-term savings:

Reduced need for manual regression testing

Faster bug detection and resolution

Decreased cost of fixing issues in production

Account for the initial investment in automation:

Setup costs for tools and infrastructure

Time spent creating and stabilizing initial test suites

Best practices for maximizing and measuring cost savings:

Start with high-value, frequently executed tests for maximum ROI

Regularly review and optimize your test suite to maintain efficiency

Use analytics tools to track and report on cost savings over time

Remember that while initial costs may be higher for automation, long-term savings can be substantial. It's important to consider the total cost of ownership over the lifespan of your project or product.
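
A break-even calculation is one way to put total cost of ownership into numbers. Add the up-front investment to the automated side, then find the first test cycle at which automation has paid for itself. This is a simplified sketch with hypothetical figures. It assumes constant per-cycle costs and ignores, for example, maintenance growth as the suite expands.

```python
import math


def break_even_cycle(setup_cost: float, manual_per_cycle: float,
                     automated_per_cycle: float) -> int:
    """Smallest positive number of cycles n where
    setup_cost + n * automated_per_cycle <= n * manual_per_cycle.
    Raises ValueError if automation never pays back."""
    saving_per_cycle = manual_per_cycle - automated_per_cycle
    if saving_per_cycle <= 0:
        raise ValueError("automation is not cheaper per cycle; no break-even")
    return max(1, math.ceil(setup_cost / saving_per_cycle))


# Hypothetical: 60k to build the suite, then 12k manual vs 3k automated per cycle.
print(break_even_cycle(60_000, 12_000, 3_000))  # 7 (60k / 9k = 6.67, rounded up)
```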

B. Time Saved Through Automation

This metric quantifies the time efficiency gained through test automation compared to manual testing processes.

Calculation: Time Saved = (Time for Manual Testing - Time for Automated Testing) / Time for Manual Testing * 100

To effectively measure and maximize time savings:

Track time spent on various testing activities:

Test execution

Test case creation and maintenance

Bug reporting and verification

Consider indirect time savings:

Faster feedback to developers

Reduced time spent on repetitive tasks

Ability to run tests outside of business hours

Factor in the time investment for automation:

Initial script development

Maintenance and updates to automated tests
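
Here is a sketch that applies the Time Saved formula while factoring in the automation time investment listed above. The automated side includes per-cycle run and triage time, maintenance, and initial script development amortized over a planned number of cycles. The amortization horizon, the figures, and the function names are assumptions for illustration.

```python
def time_saved_pct(manual_hours: float, automated_hours: float) -> float:
    """Time Saved = (manual - automated) / manual * 100."""
    if manual_hours <= 0:
        raise ValueError("manual_hours must be positive")
    return (manual_hours - automated_hours) / manual_hours * 100


def automated_hours_per_cycle(run_and_triage: float, maintenance: float,
                              development: float, cycles: int) -> float:
    """Per-cycle automated effort, with initial development amortized
    evenly over `cycles` planned runs (an assumed horizon)."""
    return run_and_triage + maintenance + development / cycles


# Hypothetical regression suite that takes 40 hours per cycle manually.
auto = automated_hours_per_cycle(run_and_triage=2, maintenance=4,
                                 development=200, cycles=20)  # 2 + 4 + 10 = 16 h
print(f"{time_saved_pct(40, auto):.0f}% time saved per cycle")  # 60% time saved per cycle
```

Shortening the amortization horizon (fewer planned cycles) makes the same suite look less efficient. That is one reason time savings tend to grow as a suite matures.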

Strategies to increase time savings:

Prioritize automation of time-consuming manual tests

Implement parallel test execution where possible

Use test data generation tools to reduce setup time
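
Parallel execution pays off when tests are independent of each other. The following is a minimal sketch using Python's standard `concurrent.futures` module, with hypothetical test functions standing in for real tests. In practice a runner plugin such as pytest-xdist handles this for you.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_parallel(tests: Dict[str, Callable[[], None]], workers: int = 4) -> Dict[str, bool]:
    """Run independent tests concurrently; a test passes if it raises nothing."""
    def run_one(test: Callable[[], None]) -> bool:
        try:
            test()
            return True
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(run_one, fn) for name, fn in tests.items()}
        return {name: fut.result() for name, fut in futures.items()}


def slow_test() -> None:
    time.sleep(0.2)  # stands in for an I/O-bound test (API call, browser step)


def failing_test() -> None:
    raise AssertionError("simulated failure")


suite: Dict[str, Callable[[], None]] = {f"test_{i}": slow_test for i in range(4)}
suite["test_broken"] = failing_test

start = time.monotonic()
results = run_parallel(suite, workers=5)
elapsed = time.monotonic() - start
# Run one after another, the four sleeps alone would take 0.8s; here expect roughly 0.2s.
print(results, f"{elapsed:.2f}s")
```

Threads suit I/O-bound tests. CPU-bound tests would need separate processes, and tests that share state (databases, files) need isolation before they can safely run side by side.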

As your automation suite matures, you should see increasing time savings. However, it's crucial to continually optimize your automated tests to maintain and improve efficiency over time.

C. Improved Time-to-Market

This metric assesses how test automation impacts your ability to release products or features more quickly.

Time-to-market is harder to quantify directly, but you can measure improvement by tracking:

Release cycle duration:

Time from feature completion to production deployment

Frequency of releases (e.g., monthly to weekly)

Testing cycle time:

Duration of regression testing cycles

Time to complete full test suites

Defect metrics in relation to release speed:

Defect detection rate during testing phases

Number of post-release defects
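
Release cycle duration and release frequency can be computed from timestamps most teams already have. Here is a sketch assuming a hypothetical log of (feature completed, deployed to production) dates per release.

```python
from datetime import date
from statistics import median
from typing import List, Tuple

# Hypothetical release log: (feature completed, deployed to production).
releases: List[Tuple[date, date]] = [
    (date(2024, 1, 2), date(2024, 1, 16)),
    (date(2024, 1, 20), date(2024, 1, 30)),
    (date(2024, 2, 5), date(2024, 2, 12)),
    (date(2024, 2, 14), date(2024, 2, 18)),
]


def median_lead_time_days(log: List[Tuple[date, date]]) -> float:
    """Median days from feature completion to production deployment."""
    return median((deployed - done).days for done, deployed in log)


def mean_days_between_releases(log: List[Tuple[date, date]]) -> float:
    """Average gap between consecutive deployments (lower = more frequent)."""
    deploys = sorted(deployed for _, deployed in log)
    if len(deploys) < 2:
        raise ValueError("need at least two releases")
    gaps = [(later - earlier).days for earlier, later in zip(deploys, deploys[1:])]
    return sum(gaps) / len(gaps)


print(median_lead_time_days(releases))       # 8.5
print(mean_days_between_releases(releases))  # 11.0
```

Recording these values before automation is introduced provides the baseline needed to show a trend later.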

To leverage automation for faster time-to-market:

Integrate automated tests into your CI/CD pipeline for continuous validation

Implement smoke tests and critical path testing for quick go/no-go decisions

Let automation handle repetitive checks so manual testers can focus on exploratory testing and other high-value activities

Best practices for measuring and improving time-to-market:

Set baseline measurements before implementing automation

Track release metrics over time to demonstrate trends

Gather feedback from product and development teams on the impact of faster testing cycles

Remember that improved time-to-market isn't just about speed – it's about delivering high-quality products more frequently. Automation should support faster releases while maintaining or improving product quality.

By focusing on these ROI metrics – Cost Savings, Time Saved, and Improved Time-to-Market – you can clearly demonstrate the value of your test automation efforts. These metrics provide tangible evidence of the benefits of automation, helping to justify continued investment and support for your testing initiatives.

When presenting these metrics to stakeholders, consider combining them with qualitative benefits such as improved product quality, increased test coverage, and enhanced team morale due to reduced repetitive work. This holistic view will provide a comprehensive understanding of the full value that test automation brings to your organization.

Remember that realizing the full ROI of test automation is a journey. Initial results may be modest, but with continued optimization and expansion of your automation strategy, the benefits will compound over time, leading to significant long-term value for your organization.

Performance Metrics

Performance metrics are crucial for ensuring that your application can handle expected loads while maintaining responsiveness and efficiency. These metrics help you identify performance bottlenecks, optimize resource usage, and ensure a smooth user experience. Let's explore three key performance metrics:

A. System Response Time

System response time measures how quickly your application responds to user requests or actions. It's typically measured in milliseconds (ms) or seconds (s) and can be broken down into server processing time and network latency. When analyzing response time, consider both average response time and percentile response times (e.g., 90th, 95th, 99th percentile) to identify outliers and worst-case scenarios.
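
The gap between an average and its percentiles is easiest to see on a small sample. Here is a minimal sketch using the nearest-rank percentile definition; the latencies are invented for illustration.

```python
import math
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times in ms: mostly fast, with one slow outlier.
latencies = [120, 95, 110, 130, 105, 98, 102, 115, 900, 125]
avg = sum(latencies) / len(latencies)
print(f"avg={avg:.0f}ms p90={percentile(latencies, 90)}ms p99={percentile(latencies, 99)}ms")
# avg=190ms p90=130ms p99=900ms
```

A single slow request pulls the average far above what most users experience. The p99 value exposes that worst case directly. Monitoring tools and Python's `statistics.quantiles` may use interpolated percentile methods, which can give slightly different numbers on small samples.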

Factors affecting response time include application code efficiency, database query performance, network conditions, and server resources. To optimize response time, consider implementing caching mechanisms, optimizing database queries and indexing, using content delivery networks (CDNs) for static assets, and implementing asynchronous processing for time-consuming tasks.

Best practices include setting performance baselines and thresholds for different types of operations, monitoring response times across various system components to identify bottlenecks, and using automated performance tests to simulate real-world scenarios.

B. Resource Utilization

Resource utilization measures how efficiently your application uses system resources. When monitoring it, focus on CPU usage, memory consumption, disk I/O operations, and network bandwidth.

To effectively manage resource utilization, establish baseline usage for normal operations, set up alerts for abnormal consumption patterns, and regularly review utilization trends to inform capacity planning. Optimization strategies include implementing efficient algorithms and data structures, optimizing database queries and indexing, using caching to reduce repeated computations or data fetches, and considering scaling strategies based on resource constraints.
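
One simple way to turn a baseline into an alert is a threshold of the baseline mean plus k standard deviations. This is a common heuristic, not the only option. Here is a sketch with hypothetical CPU samples; the helper names are illustrative.

```python
from statistics import mean, stdev
from typing import List


def alert_threshold(baseline: List[float], k: float = 3.0) -> float:
    """Threshold = baseline mean + k * sample standard deviation."""
    return mean(baseline) + k * stdev(baseline)


def abnormal_samples(samples: List[float], baseline: List[float], k: float = 3.0) -> List[float]:
    """Return samples that exceed the baseline-derived threshold."""
    limit = alert_threshold(baseline, k)
    return [s for s in samples if s > limit]


# Hypothetical CPU utilization (%) during normal operation vs. a new test run.
baseline_cpu = [38, 42, 40, 41, 39, 40, 43, 37]  # mean 40, stdev 2 -> threshold 46
current_run = [41, 44, 39, 78, 82, 40]
print(abnormal_samples(current_run, baseline_cpu))  # [78, 82]
```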

C. Concurrent User Load

Concurrent user load measures how well your application performs under various levels of simultaneous user activity. This metric is typically assessed through different types of load testing, including stress testing, spike testing, and endurance testing.

During load testing, track metrics such as response times under different load levels, error rates and types of errors encountered, resource utilization at various concurrency levels, and throughput (requests processed per second). To optimize for concurrent user load, consider implementing efficient connection pooling, using caching mechanisms to reduce database load, implementing asynchronous processing for non-critical operations, and exploring auto-scaling solutions for cloud-based applications.

Best practices include defining realistic concurrent user scenarios based on expected usage patterns, gradually increasing load to identify performance degradation points, and regularly performing load tests, especially before major releases or expected traffic spikes.
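
The idea of gradually increasing load can be illustrated with a toy harness. It steps up the number of simulated concurrent users and records throughput and error rate at each level. `fake_request` is a hypothetical stand-in for a real call to your system. Dedicated tools such as JMeter, k6, Locust, or Gatling are the usual choice for real load tests.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def measure_level(request: Callable[[], None], users: int,
                  requests_per_user: int) -> Dict[str, float]:
    """Simulate `users` concurrent clients; return throughput and error rate."""
    def client() -> int:
        errors = 0
        for _ in range(requests_per_user):
            try:
                request()
            except Exception:
                errors += 1
        return errors

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        errors = sum(pool.map(lambda _: client(), range(users)))
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    total = users * requests_per_user
    return {
        "users": users,
        "throughput_rps": total / elapsed,
        "error_rate_pct": errors / total * 100,
    }


def step_load(request: Callable[[], None], levels: List[int],
              requests_per_user: int = 5) -> List[Dict[str, float]]:
    """Increase concurrency level by level to find where performance degrades."""
    return [measure_level(request, users, requests_per_user) for users in levels]


def fake_request() -> None:
    time.sleep(0.01)  # hypothetical 10 ms service call


for row in step_load(fake_request, levels=[1, 5, 10]):
    print(row)
```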

Implementing Performance Metrics in Test Automation

To effectively incorporate performance metrics into your test automation strategy:

Integrate performance tests into your continuous integration pipeline, setting performance budgets and failing builds if thresholds are exceeded.

Generate automated performance reports after each test run and use visualization tools to track performance trends over time.

Compare performance metrics across different versions or configurations, and use historical data to identify performance regressions.

Ensure test environments closely mimic production settings and account for differences when interpreting results.
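
The budget and regression checks above can be combined into a small CI gate. The metric names, budget values, and 10% regression tolerance are assumptions, as is reading results and baseline from JSON files. Most load-testing tools can export something similar.

```python
import json
import sys
from typing import Dict, List

# Hypothetical budgets: metric name -> maximum allowed value.
BUDGETS: Dict[str, float] = {"p95_ms": 300.0, "error_rate_pct": 1.0}
REGRESSION_TOLERANCE = 0.10  # flag a metric that is more than 10% worse than baseline


def check(current: Dict[str, float], baseline: Dict[str, float]) -> List[str]:
    """Return human-readable violations; an empty list means the build passes."""
    problems = []
    for metric, limit in BUDGETS.items():
        value = current.get(metric)
        if value is None:
            problems.append(f"{metric}: missing from results")
        elif value > limit:
            problems.append(f"{metric}: {value} exceeds budget {limit}")
    for metric, old in baseline.items():
        new = current.get(metric)
        if new is not None and old > 0 and new > old * (1 + REGRESSION_TOLERANCE):
            problems.append(f"{metric}: regressed from {old} to {new}")
    return problems


def main(current_path: str, baseline_path: str) -> int:
    with open(current_path) as f:
        current = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    problems = check(current, baseline)
    for problem in problems:
        print("FAIL", problem)
    return 1 if problems else 0  # a non-zero exit code fails the CI step


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

As a CI step this could run as, for example, `python perf_gate.py results.json baseline.json`, where the script and file names are placeholders. The stored baseline would be updated deliberately after an accepted change, never automatically.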

By focusing on these performance metrics – System Response Time, Resource Utilization, and Concurrent User Load – you can ensure that your application not only functions correctly but also performs efficiently under various conditions. These metrics provide valuable insights into your application's scalability, efficiency, and user experience.

Remember that performance testing and optimization is an ongoing process. As your application evolves and user behavior changes, continually reassess your performance metrics and adjust your testing strategies accordingly. By integrating performance testing into your overall test automation strategy, you can catch and address performance issues early in the development cycle, leading to more robust and efficient applications.

Conclusion

Test automation metrics are essential tools for measuring, optimizing, and demonstrating the value of your quality assurance efforts. By focusing on coverage, execution, defect, reliability, ROI, and performance metrics, you can gain a comprehensive view of your automation strategy's effectiveness. Remember that these metrics are not just numbers—they're insights that drive continuous improvement. Regularly analyze these metrics, adapt your strategies, and align them with your organization's goals. As you refine your approach, you'll not only improve software quality but also accelerate development cycles and deliver greater value to your stakeholders.

Hire our AI Software Test Engineer

Experience the future of automation software testing.

Copyright © 2024 Qodex

|

All Rights Reserved
